Static content in multiple regions. Solutions & pitfalls.

There are a lot of CDN solutions nowadays for static content hosting. But what if your customers are distributed around the world and you need maximum performance for all of them? We have tested a few multi-regional architectures on AWS and are pleased to share a solution that works really well. We will also touch on some pitfalls: approaches that look great on paper but are not yet possible to implement on AWS.

Single region and CDN

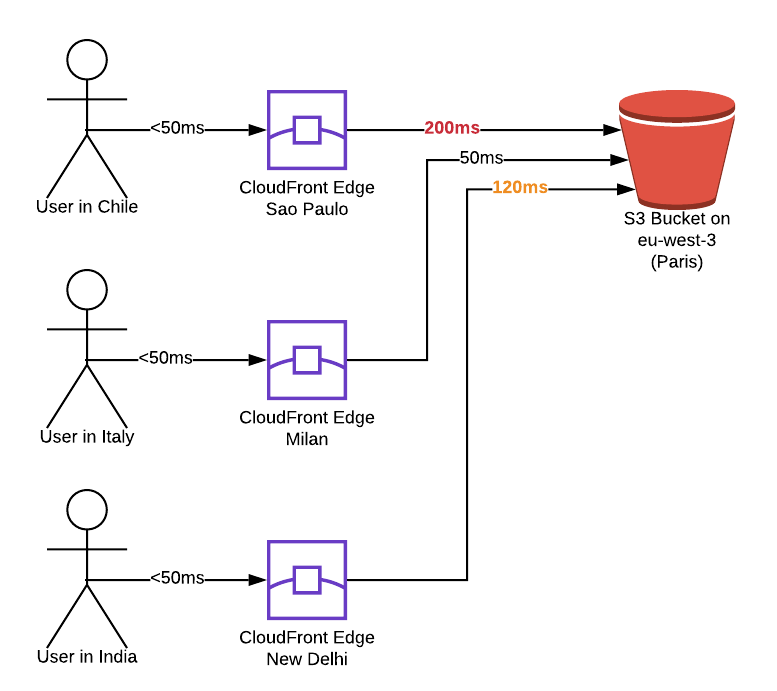

The classic solution for static website hosting is to store objects in an S3 bucket and route traffic via Amazon CloudFront. CloudFront terminates SSL/TLS connections close to the user, caches objects, and routes traffic over the AWS backbone network for maximum performance.
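To make the classic setup concrete, here is a minimal Python sketch of the `DistributionConfig` structure that the CloudFront API (for example boto3's `create_distribution`) expects for a single S3 origin. The helper name `s3_distribution_config`, the bucket name and the region are hypothetical, the cache policy ID is assumed to be the AWS managed "CachingOptimized" policy (verify it in your account), and access control for the bucket (for example origin access control) is left out for brevity.

```python
# Hypothetical helper: builds a CloudFront DistributionConfig dict for one S3 origin.
# It only builds data; submitting it to AWS is left to the caller.

# Assumed ID of the AWS managed "CachingOptimized" cache policy (verify in your account).
CACHING_OPTIMIZED_POLICY_ID = "658327ea-f89d-4fab-a63d-7e88639e58f6"


def s3_distribution_config(bucket: str, region: str, caller_reference: str,
                           cache_policy_id: str = CACHING_OPTIMIZED_POLICY_ID) -> dict:
    origin_id = f"s3-{bucket}"
    return {
        # Unique per create request; a retried identical request won't create a duplicate.
        "CallerReference": caller_reference,
        "Comment": f"Static site served from {bucket}",
        "Enabled": True,
        "DefaultRootObject": "index.html",
        "Origins": {
            "Quantity": 1,
            "Items": [{
                "Id": origin_id,
                # Regional REST endpoint of the bucket. For a new bucket outside
                # us-east-1, the global name (<bucket>.s3.amazonaws.com) can answer
                # with temporary redirects until DNS catches up.
                "DomainName": f"{bucket}.s3.{region}.amazonaws.com",
                # Empty string: no legacy origin access identity in this sketch.
                "S3OriginConfig": {"OriginAccessIdentity": ""},
            }],
        },
        "DefaultCacheBehavior": {
            "TargetOriginId": origin_id,
            # Viewers arriving over plain HTTP are redirected to HTTPS.
            "ViewerProtocolPolicy": "redirect-to-https",
            "CachePolicyId": cache_policy_id,
            "Compress": True,
        },
    }
```

With boto3 installed and credentials configured, `boto3.client("cloudfront").create_distribution(DistributionConfig=cfg)` would submit such a config.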

This solution is really popular, and there are many ready-made modules for it on GitHub, for example Serverless and Terraform modules for S3 static hosting.

CloudFront has a distributed architecture. It consists of many edge locations (servers) around the world, so cached content is stored very close to end users and can be retrieved very fast. However, fresh content that is not cached yet might be delivered more slowly, because all edge locations fetch the original content from a single S3 bucket. Latency between a user and the closest edge location is typically less than 50 ms, while latency between an edge location and the origin S3 bucket could be 200 ms or even more if they are located on different continents.
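A back-of-the-envelope model shows why cache misses matter. This is a deliberately simplified Python sketch: each hop is one number, a hit costs only the user-to-edge hop, and a miss adds one edge-to-origin hop. Real requests involve several round trips (TCP, TLS, HTTP), so treat the output as an illustration, not a measurement. The function name is hypothetical; the 50 ms and 200 ms figures come from the paragraph above.

```python
# Simplified latency model: one number per hop, ignoring handshakes and retries.

def expected_latency_ms(user_to_edge_ms: float, edge_to_origin_ms: float,
                        hit_ratio: float) -> float:
    """Average latency when a fraction hit_ratio of requests is served from the edge cache."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    hit = user_to_edge_ms                       # served directly by the edge
    miss = user_to_edge_ms + edge_to_origin_ms  # edge must fetch from the origin bucket
    return hit_ratio * hit + (1.0 - hit_ratio) * miss


if __name__ == "__main__":
    # Figures from the text: ~50 ms to the nearest edge, ~200 ms edge to origin.
    for ratio in (0.0, 0.5, 0.9, 1.0):
        print(f"hit ratio {ratio:.0%}: {expected_latency_ms(50, 200, ratio):.0f} ms")
```

With these numbers a 90% hit ratio averages about 70 ms, yet every individual miss still costs about 250 ms; that miss penalty is exactly what multiple regional buckets would try to shrink.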

Can we reduce this latency? The ideal scenario would probably be to keep CloudFront as the CDN but have the objects present in every region, in multiple S3 buckets, so that each edge location fetches objects from the closest bucket and delivers them to the user with minimal latency. Is AWS ready for this? Let's see.

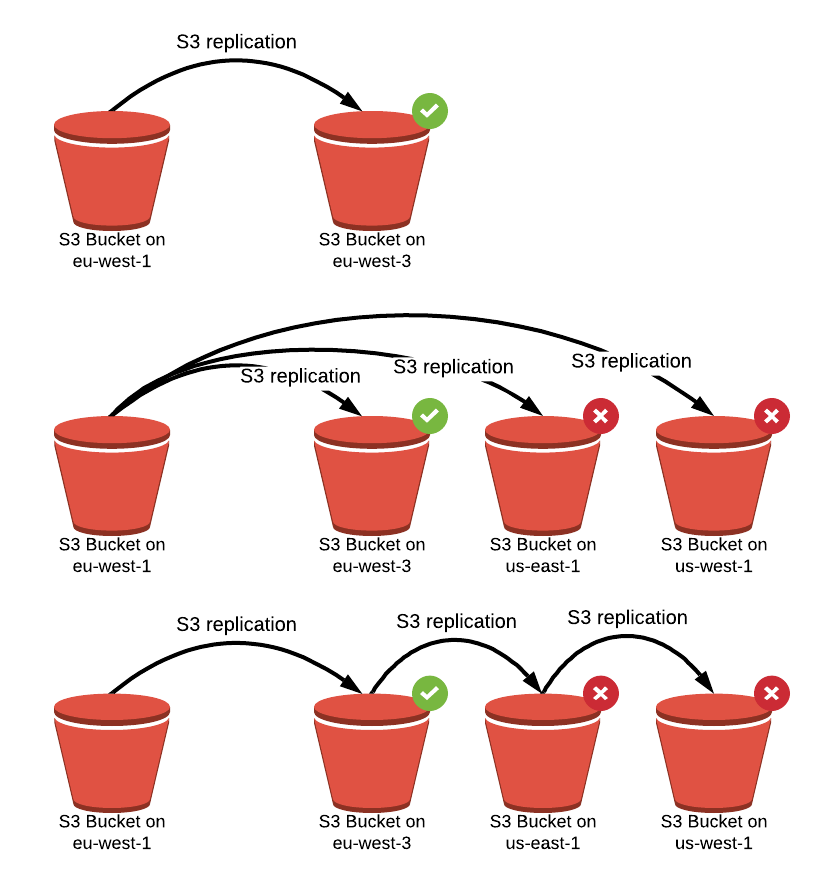

S3 replication for multi-region architectures

The first idea that comes to mind is S3 replication, a feature that replicates objects from one bucket to another. However, after a few tries I discovered that, at the time of our tests, it was not possible to replicate objects to multiple buckets: a bucket could replicate to only one destination bucket, and there was no way to configure replication to two. OK, so can we chain replication instead, with the first bucket replicating to the second and the second replicating to the third? Unfortunately not, because by default S3 does not replicate objects that were themselves created by replication. The only option Amazon leaves us is to replicate manually.
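Manual replication can be as simple as copying every uploaded object from a primary bucket into each regional bucket. Below is a minimal Python sketch of that fan-out, assuming a deploy script calls it once per uploaded key. The function name and bucket names are hypothetical; the client is passed in so the sketch runs without AWS, and with boto3 it would be an S3 client whose `copy_object` call is the real S3 CopyObject API. A single CopyObject request handles objects up to 5 GB, which covers typical static assets; for buckets in several regions you may prefer one client per destination region.

```python
from typing import Any, Iterable, List, Protocol


class CopyClient(Protocol):
    """Anything with an S3-style copy_object method, e.g. boto3.client("s3")."""

    def copy_object(self, **kwargs: Any) -> Any: ...


def replicate_object(s3: CopyClient, source_bucket: str, key: str,
                     destination_buckets: Iterable[str]) -> List[str]:
    """Copy one object from source_bucket into every other bucket; return the targets."""
    copied = []
    for bucket in destination_buckets:
        if bucket == source_bucket:
            continue  # never copy an object onto itself
        # Server-side copy: the bytes move inside S3, not through this machine.
        s3.copy_object(
            Bucket=bucket,
            Key=key,
            CopySource={"Bucket": source_bucket, "Key": key},
        )
        copied.append(bucket)
    return copied
```

For example, `replicate_object(boto3.client("s3"), "site-us-east-1", "index.html", ["site-eu-west-1", "site-ap-southeast-1"])` would copy one file to two hypothetical regional buckets. Deletions would need a similar loop, since nothing here removes objects.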

Multi-region pitfalls of Route53

Our next problem is how to route traffic between these S3 buckets. Even though CloudFront has many edge locations, a distribution is still one system with one configuration, and that configuration has to know which bucket each request should go to. Amazon's answer for this kind of routing is Route 53 latency-based records. However, they don't work in this case: latency-based records work when the target is a load balancer or a static IP address, but not when the target is an S3 bucket. So, unfortunately, this is not possible either.

Route 53 latency-based records work fine for load balancers and API Gateway endpoints, but they can't distribute traffic between S3 buckets or between CloudFront distributions.

The reason is that an S3 bucket (like a CloudFront distribution) does not have a dedicated IP address. Requests for different buckets are told apart by the Host header, and Route 53, which only answers DNS queries, cannot change the Host header the client sends based on latency. The Host header is passed through unchanged, so all traffic goes to the same S3 bucket all the time.
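A small simulation makes the Host-header argument concrete. It is a simplified Python model (real S3 routing has more cases), and the function names, record table and bucket names are hypothetical. S3 takes the bucket name from the Host header, and when the Host is a custom domain such as `static.example.com` it uses the whole host name as the bucket name. Because bucket names are globally unique, only one bucket anywhere can answer for that name, no matter which regional endpoint the latency record pointed the client at.

```python
import re
from typing import Dict, Tuple

# Simplified match for "<bucket>.s3.<region>.amazonaws.com", "<bucket>.s3.amazonaws.com"
# and "<bucket>.s3-website-<region>.amazonaws.com" style host names.
_S3_HOST = re.compile(r"^(?P<bucket>.+)\.s3[.-][^/]*amazonaws\.com$")


def bucket_from_host(host: str) -> str:
    """Return the bucket name S3 would look up for a given Host header value."""
    host = host.split(":", 1)[0].lower()  # drop an optional port
    match = _S3_HOST.match(host)
    if match:
        return match.group("bucket")
    # Custom domain (CNAME): the whole host name is treated as the bucket name.
    return host


# Hypothetical latency-based answers for static.example.com, one per user region.
LATENCY_RECORDS: Dict[str, str] = {
    "eu": "static-eu.s3.eu-west-1.amazonaws.com",
    "us": "static-us.s3.us-east-1.amazonaws.com",
}


def route_request(user_region: str, site_host: str = "static.example.com") -> Tuple[str, str]:
    """Return (endpoint DNS chose, bucket S3 looks up) for a user in user_region."""
    endpoint = LATENCY_RECORDS[user_region]  # where the TCP connection goes
    # The browser still sends the name it was asked to load, not the endpoint name.
    return endpoint, bucket_from_host(site_host)
```

Users in the two regions reach different endpoints, but both requests ask S3 for the same bucket, `static.example.com`. CloudFront has the same property: an alternate domain name can be attached to only one distribution at a time.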

Conclusion

The only solution left is our original, very simple one: a single bucket and a CloudFront distribution. Yes, it has a downside when the S3 bucket is far away from end users, because there may be some extra latency. However, that applies only to the first request for an object at each edge location; after that, cached objects are served quickly anyway. And it is currently the only one of these approaches that is possible on AWS.

I would recommend using a single S3 bucket in one region plus CloudFront. Don't try to build anything complex with multiple S3 buckets, because in most cases it won't work. If it's for production, don't forget to add an S3 bucket for access logs, so that you can analyse later who is visiting your website. Also consider AWS WAF (Web Application Firewall), which is very handy if you need to block traffic to your website for certain users, IP addresses, etc.
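Both production recommendations map to fields of the CloudFront `DistributionConfig`: `Logging` for standard access logs written to an S3 bucket, and `WebACLId` for AWS WAF. Here is a minimal Python sketch, assuming the log bucket and the web ACL already exist; the helper name and all values are hypothetical. A web ACL used with CloudFront has to be created with the CLOUDFRONT scope in us-east-1, and for a current (v2) AWS WAF ACL the field takes the ACL's ARN.

```python
import copy


def with_logging_and_waf(distribution_config: dict, log_bucket: str,
                         web_acl_arn: str, log_prefix: str = "cloudfront/") -> dict:
    """Return a copy of a DistributionConfig with access logs and a WAF web ACL."""
    cfg = copy.deepcopy(distribution_config)  # leave the caller's dict untouched
    cfg["Logging"] = {
        "Enabled": True,
        "IncludeCookies": False,
        # CloudFront expects the bucket's domain name, not its plain name.
        "Bucket": f"{log_bucket}.s3.amazonaws.com",
        "Prefix": log_prefix,
    }
    # For AWS WAF (v2) web ACLs this field holds the ACL ARN.
    cfg["WebACLId"] = web_acl_arn
    return cfg
```

To apply it to an existing distribution with boto3, you would fetch the current config and its `ETag` with `get_distribution_config(Id=...)`, then pass the modified dict to `update_distribution(Id=..., IfMatch=etag, DistributionConfig=...)`.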

Deployment tips

Tips time!

CloudFront is known for its long configuration time. After you make changes, it takes a while for Amazon to apply your configuration.

How long do you think it takes Amazon to set up a fresh CloudFront distribution with an S3 bucket? The answer really depends on which region the bucket is in. If the bucket is in the N. Virginia region (us-east-1), setup takes about 1 hour.

If the S3 bucket is in any other region, setup time increases considerably: on average maybe 2 hours, but it could be up to 12 hours.

If you are unsure which region to put the S3 bucket in, just put it in us-east-1. Otherwise, be aware that setup could take longer than expected.
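Because setup can take hours, a deployment script usually waits for CloudFront to finish before moving on. Below is a minimal Python sketch of such a wait loop, polling the distribution status, which stays `InProgress` until CloudFront reports `Deployed`. The helper name and defaults are hypothetical, and the client is injected so the loop can be exercised without AWS. boto3 also ships a ready-made `distribution_deployed` waiter; a hand-written loop simply makes the timeout explicit.

```python
import time
from typing import Any, Callable


def wait_until_deployed(cloudfront: Any, distribution_id: str,
                        poll_seconds: float = 60.0,
                        timeout_seconds: float = 12 * 3600,  # worst case mentioned above
                        sleep: Callable[[float], None] = time.sleep) -> float:
    """Poll get_distribution until Status is "Deployed"; return seconds waited."""
    waited = 0.0
    while True:
        response = cloudfront.get_distribution(Id=distribution_id)
        if response["Distribution"]["Status"] == "Deployed":
            return waited
        if waited >= timeout_seconds:
            raise TimeoutError(
                f"{distribution_id} not deployed after {waited:.0f} s")
        sleep(poll_seconds)
        waited += poll_seconds
```

With boto3 you would call it as `wait_until_deployed(boto3.client("cloudfront"), "<distribution-id>")`; passing a no-op `sleep` is only useful in tests.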

That's it, guys. Thanks for reading.

If you notice that something is no longer correct, please leave me a comment under the YouTube video.

See you next time!